Over the last two to three years, artificial intelligence (AI) agents have become more embedded in the software development process. According to Statista, around three out of four developers (75%) use GitHub Copilot, OpenAI Codex, ChatGPT, and other generative AI tools in their daily work.

Nonetheless, while AI shows promise in handling software development tasks, it also creates a wave of legal uncertainty.

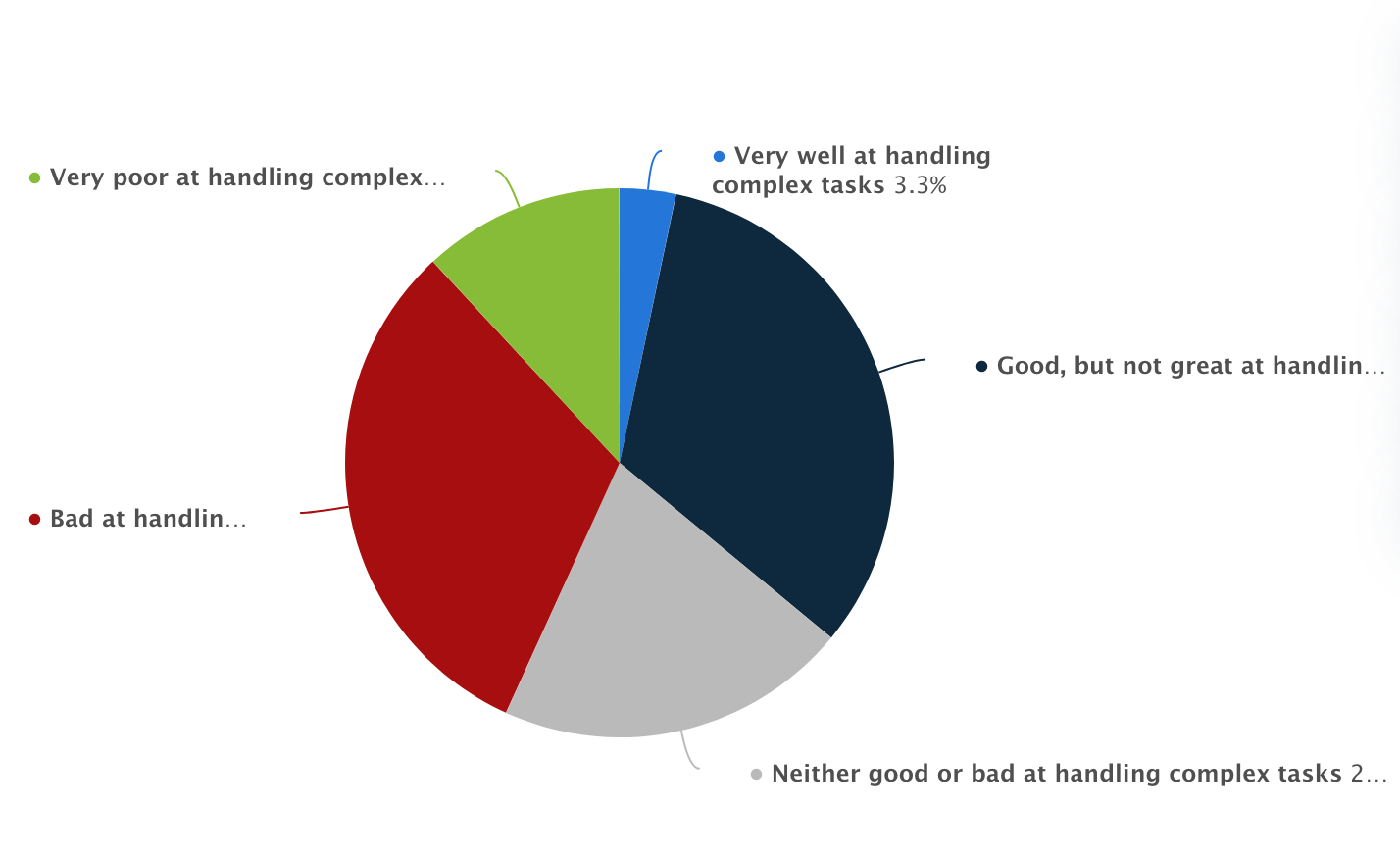

Ability of Artificial Intelligence in Managing Complex Tasks, Statista

Who owns the code written by an AI? What happens if AI-made code infringes on someone else’s intellectual property? And what are the privacy risks when commercial data is processed through AI models?

To answer these questions, we’ll explain how AI-assisted development is viewed from a legal standpoint, especially in outsourcing, and walk through the considerations companies should understand before integrating these tools into their workflows.

What Is AI in Custom Software Development?

The market for AI technologies is vast, amounting to around $244 billion in 2025. Generally, AI is divided into machine learning and deep learning and further into natural language processing, computer vision, and more.

In software development, AI tools refer to intelligent systems that can assist or automate parts of the programming process. They can suggest lines of code, complete functions, and even generate entire modules depending on context or prompts provided by the developer.

In the context of outsourcing projects—where speed is no less important than quality—AI technologies are quickly becoming staples in development environments.

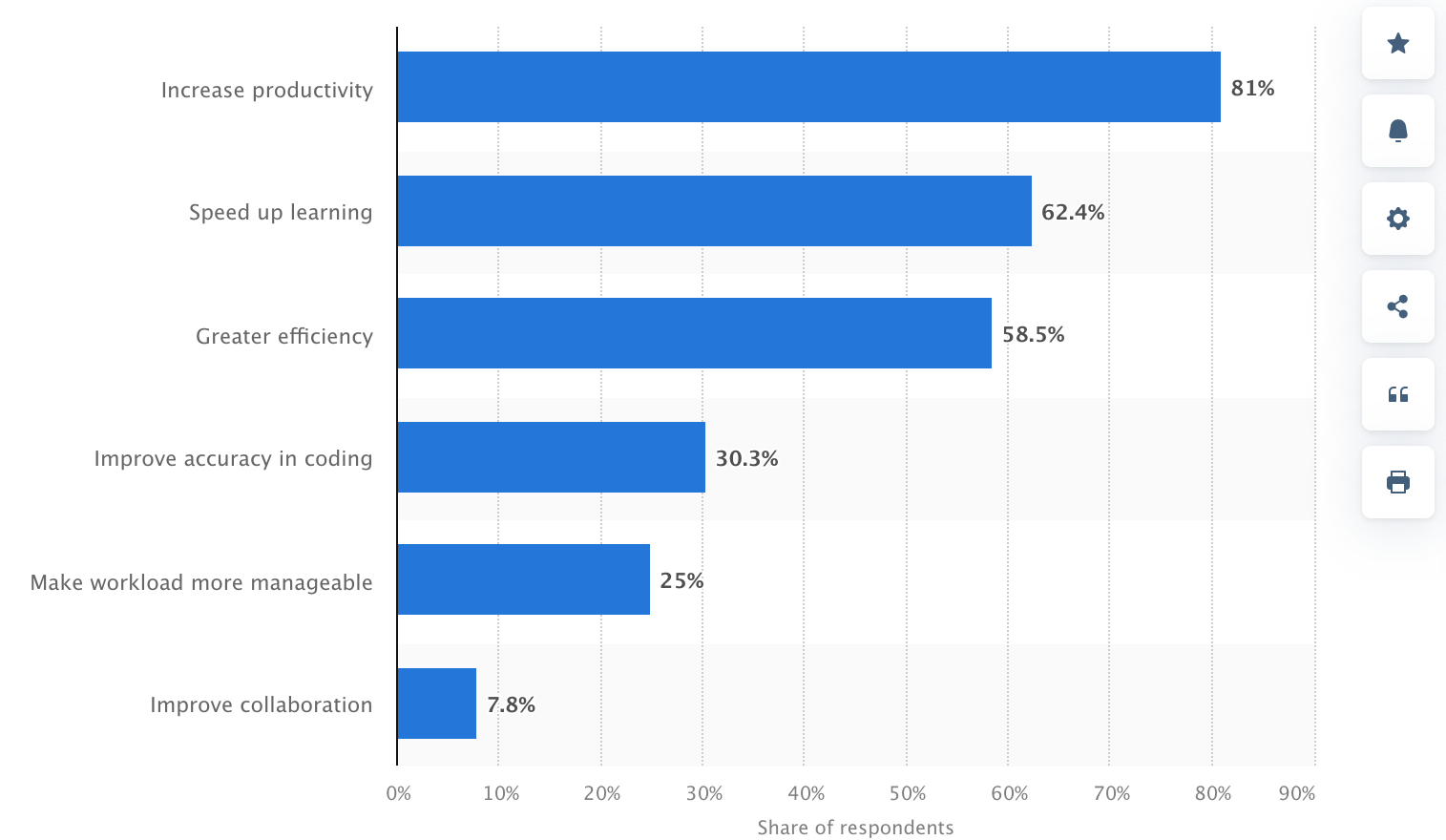

They raise productivity by taking redundant tasks, decrease the time spent on boilerplate code, and assist developers who may be working in unfamiliar frameworks or languages.

Benefits of Using Artificial Intelligence, Statista

How AI Tools Can Be Integrated in Outsourcing Projects

In 2025, artificial intelligence has become a sought-after skill in nearly all technical professions.

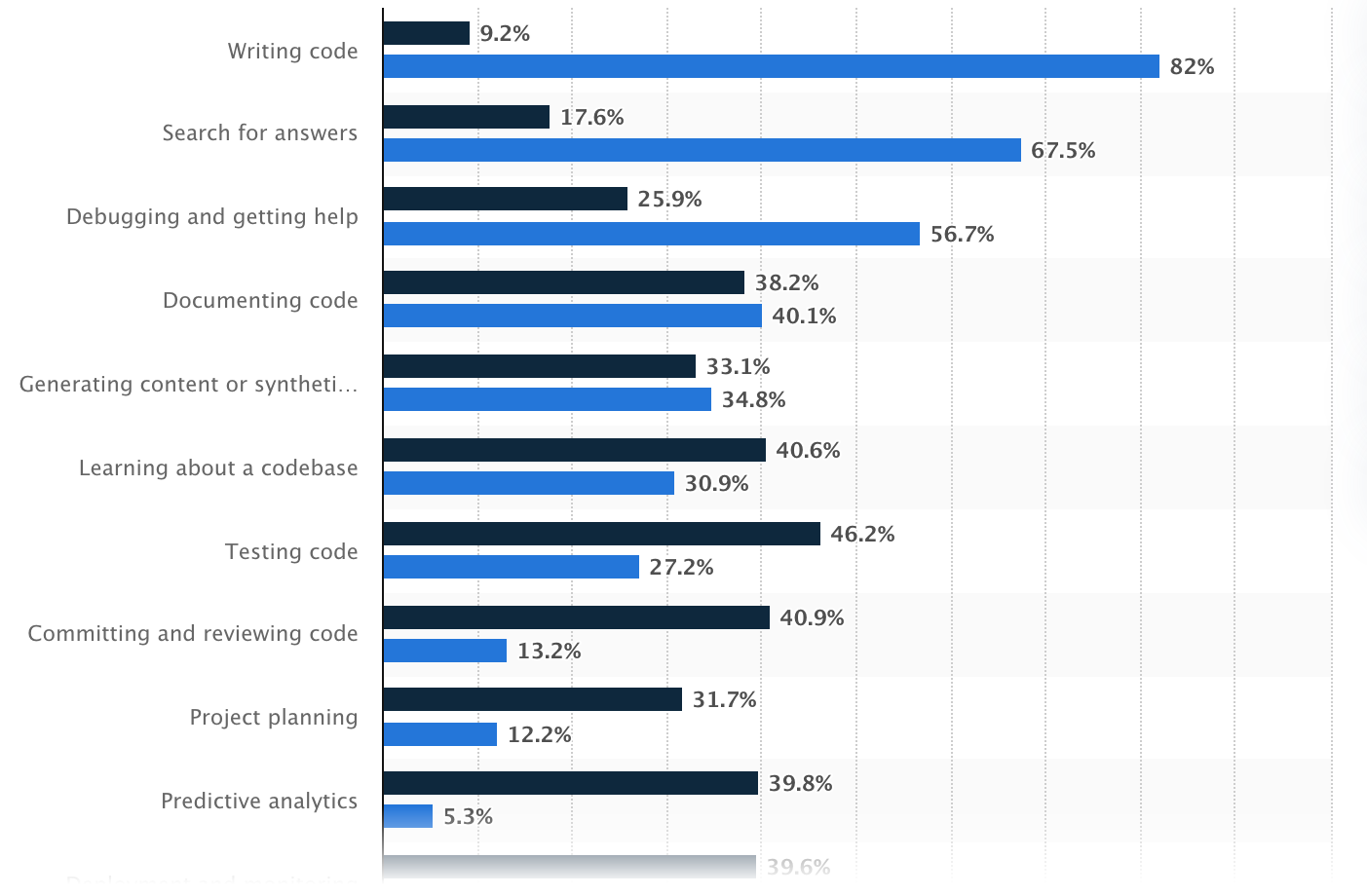

A bartender or plumber may not need AI skills to the same degree, but for software developers they have become a must. In software development outsourcing, AI tools can be used in many ways:

- Code Generation: GitHub Copilot and other AI tools assist outsourced developers by suggesting code or auto-completing functions as they type.

- Bug Detection: Instead of waiting for human verification during software testing, AI can flag errors or harmful code so teams can fix flaws before they become serious issues.

- Writing Tests: AI can generate test cases directly from the code, which makes testing quicker and more thorough.

- Documentation Support: AI can add comments and draft documentation explaining what the code does.

- Multi-language Support: If the project needs to switch programming languages, AI can help translate or re-write segments of code in order to minimize the need for specialized knowledge for every programming language.

Most Popular Uses of AI in Development, Statista

Legal Implications of Using AI in Custom Software Development

AI tools can be incredibly helpful in software development, especially when outsourcing. But using them also raises some legal questions businesses need to be aware of, mainly around ownership, privacy, and responsibility.

Intellectual Property (IP) Issues

When developers use AI tools like GitHub Copilot, ChatGPT, or other code-writing assistants, it’s natural to ask: Who actually owns the code that gets written? This is one of the trickiest legal questions right now.

Currently, there’s no clear global agreement. In general, AI doesn’t own anything, and the developer who uses the tool is considered the “author,” though this may vary by jurisdiction.

The catch is that AI tools learn from tons of existing code on the internet. Sometimes, they generate code that’s very similar (or even identical) to the code they were trained on, including open-source projects.

If that code is copied too closely, and it’s under a strict open-source license, you could run into legal problems, especially if you didn’t realize it or follow the license rules.

Outsourcing can make it even more problematic. If you’re working with an outsourcing team and they use AI tools during development, you need to be extra clear in your contracts:

- Who owns the final code?

- What happens if the AI tool accidentally reuses licensed code?

- Is the outsourced team allowed to use AI tools at all?

To stay on the safe side, you can:

- Make sure contracts clearly state who owns the code.

- Double-check that the code doesn’t violate any licenses.

- Consider using tools that run locally or limit what the AI sees to avoid leaking or copying restricted content.
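One small part of the license double-check can be automated. The sketch below scans a piece of AI-suggested code for text that often accompanies licensed material, such as SPDX identifiers, GPL notices, and copyright lines. The function name `find_license_markers` and the patterns are assumptions made for illustration. A hit only means the snippet deserves a closer look, and a clean result does not prove the code is free of license obligations.

```python
import re

# Illustrative patterns only (assumed for this sketch); a real audit would
# rely on a dedicated license scanner and legal review.
LICENSE_MARKERS = {
    "SPDX identifier": re.compile(r"SPDX-License-Identifier:\s*([\w.\-+]+)"),
    "GPL notice": re.compile(
        r"GNU (?:Lesser |Affero )?General Public License", re.IGNORECASE
    ),
    "copyright line": re.compile(
        r"Copyright\s+(?:\(c\)|©)?\s*\d{4}", re.IGNORECASE
    ),
}


def find_license_markers(code: str) -> list[tuple[int, str, str]]:
    """Return (line number, marker name, matched text) for every hit."""
    hits = []
    for line_no, line in enumerate(code.splitlines(), start=1):
        for name, pattern in LICENSE_MARKERS.items():
            match = pattern.search(line)
            if match:
                hits.append((line_no, name, match.group(0)))
    return hits


if __name__ == "__main__":
    # Hypothetical AI suggestion that carried over a license header.
    suggestion = (
        "# SPDX-License-Identifier: GPL-3.0-only\n"
        "# Copyright (c) 2019 Example Author\n"
        "def add(a, b):\n"
        "    return a + b\n"
    )
    for line_no, name, text in find_license_markers(suggestion):
        print(f"line {line_no}: {name}: {text}")
```

A check like this could run in code review or CI so that flagged suggestions are routed to a human before they are merged.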

Data Security and Privacy

When using AI tools in software development, especially in outsourcing, another major consideration is data privacy and security. So what’s the risk?

Most AI tools, such as ChatGPT and Copilot, run in the cloud, which means the information developers put into them may be transmitted to external servers.

If developers copy and paste proprietary code, login credentials, or commercial data into these tools, that information could be retained, reused, and later revealed. The situation becomes even worse if:

- You’re sharing confidential business records

- Your project involves customer or user data

- You’re in a regulated industry such as healthcare or finance

So what does the law say? Regulations differ from country to country, but the most notable are:

- GDPR (Europe): In simple terms, GDPR protects personal data. If you gather data from people in the EU, you have to explain what you’re collecting and why you need it, and you must have a lawful basis for processing it, such as their consent. People can ask to see their data, correct anything wrong, or have it deleted.

- HIPAA (US, healthcare): HIPAA covers private health information and medical records. If you are subject to HIPAA, you can’t just paste anything related to patient records into an AI tool or chatbot, especially one that runs online. Also, if you work with other companies (outsourcing teams or software vendors), they need to follow the same rules and sign a special agreement (a business associate agreement) to make it all legal.

- CCPA (California): CCPA is a privacy law that gives people more control over their personal info. If your business collects data from California residents, you have to let them know what you’re gathering and why. People can ask to see their data, have it deleted, or stop you from sharing or selling it. Even if your company is based somewhere else, you may still have to follow CCPA if you’re processing data from people in California and your business meets the law’s applicability thresholds.

The most obvious and logical question here is how to protect data. First, don’t put anything sensitive (passwords, customer info, or private company data) into public AI tools unless you’re sure they’re safe.

For projects that concern confidential information, it’s better to use AI assistants that run on local computers and don’t send anything to the internet.

Also, take a good look at the contracts with any outsourcing partners to make sure they’re following the right practices for keeping data safe.
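As one possible safeguard against pasting sensitive values into a public AI tool, a team could run text through a redaction step before it leaves the developer’s machine. The sketch below is a minimal illustration under stated assumptions: the function name `redact`, the secret-name list, and the regular expressions are hypothetical and would need tuning for a real codebase. Pattern matching like this will miss some secrets, so it supplements the rules above rather than replacing them.

```python
import re

# Hypothetical redaction rules for illustration; adjust to your own project.
REDACTION_RULES = [
    # Quoted values assigned to names that look like secrets,
    # e.g. DB_PASSWORD = "..." or api_key: '...'
    (
        re.compile(
            r"\b(\w*(?:password|passwd|secret|api_key|token)\w*)"
            r"(\s*[:=]\s*)(['\"]).*?\3",
            re.IGNORECASE,
        ),
        r"\1\2\3[REDACTED]\3",
    ),
    # Email addresses
    (re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"), "[REDACTED_EMAIL]"),
]


def redact(text: str) -> str:
    """Apply every redaction rule in order and return the cleaned text."""
    for pattern, replacement in REDACTION_RULES:
        text = pattern.sub(replacement, text)
    return text


if __name__ == "__main__":
    prompt = (
        'DB_PASSWORD = "hunter2"\n'
        "# ask alice@example.com about the schema\n"
        "def connect(): ...\n"
    )
    print(redact(prompt))
```

Running the redaction locally, before any network call, keeps the original values on the developer’s machine even if the AI tool itself retains prompts.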

Accountability and Responsibility

AI tools can carry out many tasks, but they don’t take responsibility when something goes wrong. The blame still falls on people: the developers, the outsourcing team, and the business that owns the project.

If the code has a flaw, creates a security gap, or causes damage, it’s not the AI’s fault; the people using it are responsible. If no one takes ownership, small problems can turn into large (and expensive) ones.

To avoid this situation, businesses need clear directions and human oversight:

- Always review AI-generated code. It’s just a starting point, not a finished product. Developers still need to test, debug, and verify every part.

- Assign responsibility. Be it an in-house team or an outsourced partner, make sure someone is clearly responsible for quality control.

- Include AI in your contracts. Your agreement with an outsourcing provider should say:

  - Whether they can use AI tools.

  - Who is responsible for reviewing the AI’s work.

  - Who pays for fixes if something goes wrong because of AI-generated code.

- Keep a record of AI usage. Document when and how AI tools are utilized, especially for major code contributions. That way, if problems emerge, you can trace back what happened.
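A record of AI usage does not need special tooling. As a minimal sketch, the snippet below appends one JSON line per AI-assisted contribution to a log file. The record fields, the class name `AIUsageRecord`, and the file name are assumptions chosen for illustration; the right fields depend on what your contract and auditors require.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class AIUsageRecord:
    """One AI-assisted contribution (hypothetical fields)."""
    tool: str          # assistant that produced the suggestion
    file_path: str     # file that received AI-generated code
    summary: str       # short description of what was generated
    reviewed_by: str   # person who reviewed the change
    timestamp: str = ""

    def __post_init__(self):
        # Default to the current UTC time when no timestamp is supplied.
        if not self.timestamp:
            self.timestamp = datetime.now(timezone.utc).isoformat()


def to_json_line(record: AIUsageRecord) -> str:
    """Serialize a record as a single JSON line."""
    return json.dumps(asdict(record))


def append_record(log_path: str, record: AIUsageRecord) -> None:
    """Append the record to a JSON Lines log file."""
    with open(log_path, "a", encoding="utf-8") as log_file:
        log_file.write(to_json_line(record) + "\n")


if __name__ == "__main__":
    append_record(
        "ai_usage.jsonl",  # hypothetical log location
        AIUsageRecord(
            tool="Copilot",
            file_path="src/billing.py",
            summary="Generated invoice parsing function",
            reviewed_by="j.doe",
        ),
    )
```

An append-only, one-line-per-entry format keeps the log easy to search later when you need to trace where a piece of code came from.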

Case Studies and Examples

AI in software development is already common practice at many tech giants. Statistically, though, smaller companies with fewer employees are more likely to use artificial intelligence than larger ones.

Below, we have compiled some real-world examples that show how different businesses are applying AI and the lessons they’re learning along the way.

Nabla (Healthcare AI Startup)

Nabla, a French healthtech company, integrated GPT-3 (via OpenAI) to assist doctors with writing medical notes and summaries during consultations.

How they use it:

- AI listens to patient-doctor conversations and creates structured notes.

- The time doctors spend on admin work shrinks noticeably.

Legal & privacy actions:

- Because they operate in a healthcare setting, Nabla intentionally chose not to use OpenAI’s API directly due to concerns about data privacy and GDPR compliance.

- Instead, they built their own secure infrastructure using open-source models like GPT-J, hosted locally, to ensure no patient data leaves their servers.

Lesson learned: In privacy-sensitive industries, using self-hosted or private AI models is often a safer path than relying on commercial cloud-based APIs.

Replit and Ghostwriter

Replit, a collaborative online coding platform, developed Ghostwriter, its own AI assistant similar to Copilot.

How it’s used:

- Ghostwriter helps users (including beginners) write and complete code right in the browser.

- It’s integrated across Replit’s development platform, often used in education and startups.

Challenge:

- Replit has to balance ease of use with license compliance and transparency.

- The company provides disclaimers encouraging users to review and edit the generated code, stressing that it is only a suggestion.

Lesson learned: AI-generated code is powerful but not always safe to use “as is.” Even platforms that build AI tools themselves push for human review and caution.

Amazon’s Internal AI Coding Tools

Amazon has developed its own internal AI-powered tools, similar to Copilot, to assist its developers.

How they use it:

- AI helps developers write and review code across multiple teams and services.

- It’s used internally to improve developer productivity and speed up delivery.

Why they don’t use external tools like Copilot:

- Amazon has strict internal policies around intellectual property and data privacy.

- They prefer to build and host tools internally to sidestep legal risks and protect proprietary code.

Lesson learned: Large enterprises often avoid third-party AI tools due to concerns about IP leakage and loss of control over sensitive data.

How to Safely Use AI Tools in Outsourcing Projects: General Recommendations

Using AI tools in outsourced development can bring faster delivery, lower costs, and higher coding productivity. But to do it safely, companies need to set up the right processes and protections from the start.

First, it’s important to make AI usage expectations clear in contracts with outsourcing partners. Agreements should specify whether AI tools can be used, under what circumstances, and who is responsible for reviewing and validating AI-generated code.

These contracts should also include strong intellectual property clauses, spelling out who owns the final code and what happens if AI accidentally introduces open-source or third-party licensed content.

Data protection is another critical concern. If developers use AI tools that send data to the cloud, they must never input sensitive or proprietary information unless the tool complies with the regulations that apply to the project, such as GDPR, HIPAA, or CCPA.

In highly regulated industries, it’s generally safer to use self-hosted AI models or versions that run in a controlled environment to minimize the risk of data exposure.

To avoid legal and quality issues, companies should also implement human oversight at every stage. AI tools are great for suggestions, but they don’t understand business context or legal requirements.

Developers must still test, audit, and re-review all code before it goes live. Establishing a code review workflow where senior engineers double-check AI output helps ensure safety and accountability.

It’s also wise to document when and how AI tools are used in the development process. Keeping a record helps trace the source of any future defects or legal issues and shows good faith in regulatory audits.

Finally, make sure your team (or your outsourcing partner’s team) receives basic training in AI best practices. Developers should understand the limitations of AI suggestions, how to detect licensing risks, and why it’s important to validate code before shipping it.

FAQ

Q: Who owns the code generated by AI tools?

Ownership usually goes to the company commissioning the software—but only if that’s clearly stated in your agreement. The complication comes when AI tools generate code that resembles open-source material. If that content is under a license, and it’s not attributed properly, it could raise intellectual property issues. So, clear contracts and manual checks are key.

Q: Is AI-generated code safe to use as-is?

Not always. AI tools can accidentally reproduce licensed or copyrighted code, especially if they were trained on public codebases. While the suggestions are useful, they should be treated as starting points—developers still need to review, edit, and verify the code before it’s used.

Q: Is it safe to input sensitive data into AI tools like ChatGPT?

Usually, no. Unless you’re using a private or enterprise version of the AI that guarantees data privacy, you shouldn’t enter any confidential or proprietary information. Public tools process data in the cloud, which could expose it to privacy risks and regulatory violations.

Q: What data protection laws should we consider?

This depends on where you operate and what kind of data you handle. In Europe, the GDPR requires a lawful basis (such as consent) and transparency when using personal data. In the U.S., HIPAA protects medical data, while CCPA in California gives users control over how their personal information is collected and deleted. If your AI tools touch sensitive data, they must comply with these regulations.

Q: Who is responsible if AI-generated code causes a problem?

Ultimately, the responsibility falls on the development team—not the AI tool. That means whether your team is in-house or outsourced, someone needs to validate the code before it goes live. AI can speed things up, but it can’t take responsibility for mistakes.

Q: How can we safely use AI tools in outsourced projects?

Start by putting everything in writing: your contracts should cover AI usage, IP ownership, and review processes. Only use trusted tools, avoid feeding in sensitive data, and make sure developers are trained to use AI responsibly. Most importantly, keep a human in the loop for quality assurance.

Q: Does SCAND use AI for software development?

Yes, provided that the client agrees. If public AI tools are licensed, we use Microsoft Copilot in VSCode and Cursor IDE, with models like ChatGPT 4o, Claude Sonnet, DeepSeek, and Qwen. If a client requests a private setup, we use local AI assistants in VSCode, Ollama, LM Studio, and llama.cpp, with everything stored on secure machines.

Q: Does SCAND use AI to test software?

Yes, but with permission from the client. We use AI tools like ChatGPT 4o and Qwen Vision for automated testing and Playwright and Selenium for browser testing. When required, we automatically generate unit tests using AI models in Copilot, Cursor, or locally available tools like Llama, DeepSeek, Qwen, and Starcoder.