The AI platform Clarifai is attempting to make it easier for companies to leverage their existing cloud and hardware investments for AI. Clarifai already offers tools to assist throughout the whole AI lifecycle, including data labeling, training, evaluation, workflows, and feedback.

Now in public preview, Clarifai's new Compute Orchestration capability gives companies a single control plane for governing access to AI resources, monitoring performance, and managing costs.

Customers can deploy any model on hardware from any vendor in any cloud, on-premises, air-gapped, or SaaS environment, a major expansion of the deployment options Clarifai offers.

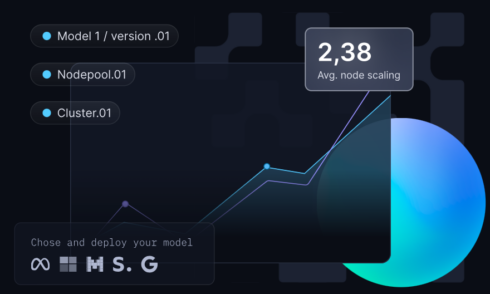

According to Clarifai, Compute Orchestration uses clusters and nodepools to organize and manage compute resources. A compute cluster is the “overarching computational environment where models are executed,” while a nodepool is a set of dedicated nodes within a cluster that share similar configurations.

“Cluster configuration lets you specify where and how your models are run, ensuring better performance, lower latency, and adherence to regional regulations. You can specify a cloud provider, such as AWS, that will provide the underlying compute infrastructure for hosting and running your models. You can also specify the geographic location of the data center where the compute resources will be hosted,” Clarifai wrote in its documentation.
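To make the cluster and nodepool relationship concrete, here is a minimal Python sketch of the hierarchy described above. It is an illustration only, not Clarifai's SDK or API: the class names, the fields (`instance_type`, `min_nodes`, `max_nodes`), and the example values are all hypothetical stand-ins for the cloud provider, region, and node configuration the documentation mentions.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Nodepool:
    """A set of dedicated nodes that share a similar configuration."""
    name: str
    instance_type: str  # hypothetical label for the node hardware
    min_nodes: int = 0  # hypothetical scaling bounds
    max_nodes: int = 1


@dataclass
class Cluster:
    """The overarching environment in which models are executed."""
    name: str
    cloud_provider: str  # e.g. "aws"
    region: str          # geographic location of the data center
    nodepools: List[Nodepool] = field(default_factory=list)

    def add_nodepool(self, pool: Nodepool) -> None:
        # Reject impossible scaling bounds and duplicate names up front.
        if pool.min_nodes < 0 or pool.max_nodes < pool.min_nodes:
            raise ValueError("need 0 <= min_nodes <= max_nodes")
        if any(p.name == pool.name for p in self.nodepools):
            raise ValueError(f"duplicate nodepool name: {pool.name}")
        self.nodepools.append(pool)


# Illustrative values only.
cluster = Cluster(name="eu-inference", cloud_provider="aws", region="eu-west-1")
cluster.add_nodepool(
    Nodepool(name="gpu-pool", instance_type="gpu-large", min_nodes=0, max_nodes=4)
)
```

In this sketch the cluster carries the "where" (provider and region), and each nodepool carries the "what" (a group of similarly configured nodes), which mirrors the split Clarifai describes.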

Compute Orchestration leverages capabilities like GPU fractioning, batching, autoscaling, and spot instances to automatically optimize resource usage. According to the company, Compute Orchestration can reduce compute usage by 3.7x, support more than 1.6 million inference requests per second, and offer 99.9997% reliability under extreme load. It can also reduce costs by 60% to 90%, depending on the configuration.
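For a sense of scale, the short Python sketch below converts those vendor-reported figures into more familiar terms. It rests on assumptions the company does not state: that "reliability" means the fraction of requests that succeed, and that the throughput and reliability figures hold at the same time. The $10,000 baseline is a made-up number used purely for illustration.

```python
def remaining_fraction(reduction_factor: float) -> float:
    """Fraction of the original compute still needed after an N-fold reduction."""
    return 1.0 / reduction_factor


def expected_failures_per_second(requests_per_second: float,
                                 reliability_percent: float) -> float:
    """Failed requests per second, assuming reliability is a per-request success rate."""
    return requests_per_second * (1.0 - reliability_percent / 100.0)


def cost_after_reduction(baseline_cost: float, reduction_percent: float) -> float:
    """Cost that remains after cutting a baseline by the given percentage."""
    return baseline_cost * (1.0 - reduction_percent / 100.0)


compute_left = remaining_fraction(3.7)                       # share of original compute
failures = expected_failures_per_second(1_600_000, 99.9997)  # failed requests per second
cost_low = cost_after_reduction(10_000, 90)    # hypothetical $10,000 baseline
cost_high = cost_after_reduction(10_000, 60)

print(f"compute remaining: {compute_left:.0%}")
print(f"expected failures per second: {failures:.1f}")
print(f"remaining cost range: ${cost_low:,.0f} to ${cost_high:,.0f}")
```

Under those assumptions, a 3.7x reduction leaves roughly 27% of the original compute, and 99.9997% reliability at 1.6 million requests per second works out to about five failed requests per second.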

Customers can also deploy into a VPC or an on-premises Kubernetes cluster without opening inbound ports, setting up VPC peering, or creating custom IAM roles, which helps companies maintain security and flexibility.

“This new platform capability brings the convenience of serverless autoscaling to any environment, regardless of deployment location or hardware, and dynamically scales resources to meet workload demands. Clarifai handles the containerization, model packing, time slicing, and other performance optimizations on your behalf,” Clarifai stated in its documentation.