Embodied AI involves embedding artificial intelligence into tangible entities, such as robots, equipping them with the capacity to perceive, learn from, and engage dynamically with their surroundings [1]. This approach enables robots to evolve and adapt to environmental changes. A notable example is the Figure AI humanoid, which leverages OpenAI’s technologies and demonstrates an advanced ability to comprehend its environment and respond appropriately to various stimuli, marking a significant stride in the development of intelligent, interactive machines. In this article, we review the computing challenges of embodied AI and explore a few research directions for building computing systems for embodied AI.

Computing Challenges of Embodied AI

Although embodied AI has demonstrated enormous potential to shape our future economy, it places extreme demands on computing if it is to achieve flexibility, computational efficiency, and scalability. We summarize the current computing challenges of embodied AI below:

Complex Software Stack Challenge: Complexity breeds inflexibility. Embodied AI systems must integrate a diverse array of functionalities, from perceiving the environment and interacting physically with it to executing complex tasks. This integration involves harmonizing various components such as sensor data analysis, sophisticated algorithmic processing, and precise control over actuators. Furthermore, to cater to the broad spectrum of robotic forms and their associated tasks, a versatile embodied AI software stack is essential. Making these diverse elements operate cohesively within a single software architecture introduces significant complexity and makes it harder to build a seamless and efficient software ecosystem.

Inadequate Computing Architecture: Current computing architectures are inadequate for the intricate demands of embodied AI. The requirement for real-time processing of vast data streams, coupled with the need for high concurrency, unwavering reliability, and energy efficiency, poses a significant challenge. These limitations prevent robots from functioning optimally in multifaceted and dynamic environments, underscoring the urgent need for innovative computing architectures tailored to the requirements of embodied AI.

Data Bottleneck Obstacle: Lack of data limits scalability. The evolution and refinement of embodied AI systems heavily rely on the acquisition and utilization of extensive, high-quality datasets. However, procuring data from interactions between robots and their operating environments proves to be a daunting task. This is primarily due to the sheer variety and intricacy of these environments, compounded by the logistical and technical difficulties in capturing diverse, real-world data. This data bottleneck not only impedes the development process but also limits the potential for embodied AI robots to learn, adapt, and evolve in response to their surroundings.

Building Computing Systems for Embodied AI

Addressing these challenges requires a multi-faceted approach that focuses on achieving flexibility through layered software architecture, computational efficiency through innovative computer architecture, and scalability through automation of data generation.

2.1. Layered Software Stack to Achieve Flexibility

We believe that a layered software architecture is an effective way to provide the abstractions necessary to manage software complexity and thereby enable flexibility:

Control Adaptation: The control adaptation layer serves as a bridge between core software and diverse hardware, simplifying the integration of different sensors, actuators, and control systems. It abstracts low-level complexities, allowing developers to concentrate on behavior logic and decision-making algorithms, enhancing development efficiency. One example of the control adaptation layer is the stretch body library from Hello Robot [2].

Core Robotic Functions: On top of the control adaptation layer sits the core robotic function library, which contains packages for essential robot operations, from mobility to user interaction, and offers developers rich, high-level interfaces. It ensures compatibility and flexibility across different hardware, significantly boosting development efficiency. One example of the core robotic function library is Meta’s Home Robot project, which provides basic functions for robotic navigation and manipulation [3].

Robotic Applications: The application layer offers software interfaces that enable the creation of complex intelligent applications. Using large foundation models, developers can embed sophisticated AI applications into robots, enhancing their ability to understand and interact with their surroundings. Examples of the robotic applications layer include RT-2 [4] and CLIP [5].
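
To make the three layers concrete, here is a minimal sketch of how they might be separated in code. It is an illustration only: `BaseDriver`, `SimulatedDriver`, `Navigator`, and `patrol` are hypothetical names invented for this example, the robot is a toy one-dimensional base, and none of this reflects the actual APIs of Stretch Body, Home Robot, or RT-2.

```python
from abc import ABC, abstractmethod


class BaseDriver(ABC):
    """Control adaptation layer: one interface over different hardware."""

    @abstractmethod
    def command_velocity(self, velocity: float) -> None:
        """Request a forward velocity in m/s."""

    @abstractmethod
    def read_position(self) -> float:
        """Return the current position in metres."""

    @abstractmethod
    def step(self, dt: float) -> None:
        """Advance the hardware (or simulation) by dt seconds."""


class SimulatedDriver(BaseDriver):
    """Hypothetical 1-D simulated base standing in for real hardware."""

    def __init__(self) -> None:
        self._position = 0.0
        self._velocity = 0.0

    def command_velocity(self, velocity: float) -> None:
        self._velocity = velocity

    def read_position(self) -> float:
        return self._position

    def step(self, dt: float) -> None:
        self._position += self._velocity * dt


class Navigator:
    """Core robotic function layer: navigation written against BaseDriver only."""

    def __init__(self, driver: BaseDriver, gain: float = 1.0, dt: float = 0.1) -> None:
        self.driver = driver
        self.gain = gain
        self.dt = dt

    def go_to(self, target: float, tolerance: float = 0.01, max_steps: int = 1000) -> bool:
        """Proportional controller; returns True if the target was reached."""
        for _ in range(max_steps):
            error = target - self.driver.read_position()
            if abs(error) <= tolerance:
                self.driver.command_velocity(0.0)
                return True
            self.driver.command_velocity(self.gain * error)
            self.driver.step(self.dt)
        self.driver.command_velocity(0.0)
        return False


def patrol(navigator: Navigator, waypoints: list[float]) -> list[bool]:
    """Application layer: a task expressed purely in terms of core functions."""
    return [navigator.go_to(w) for w in waypoints]


if __name__ == "__main__":
    nav = Navigator(SimulatedDriver())
    print(patrol(nav, [1.0, -0.5, 2.0]))
```

Because `Navigator` depends only on the `BaseDriver` interface, supporting a different robot in this sketch would mean writing one new driver class while the navigation and application code stay unchanged.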

2.2. Embodied AI Computer Architecture

A new architecture definition is required to provide computational efficiency for embodied AI applications. This architecture must integrate multiple multimodal sensors, provide efficient computational support for core robotic functions, and enable real-time processing of large model-based robotic applications.

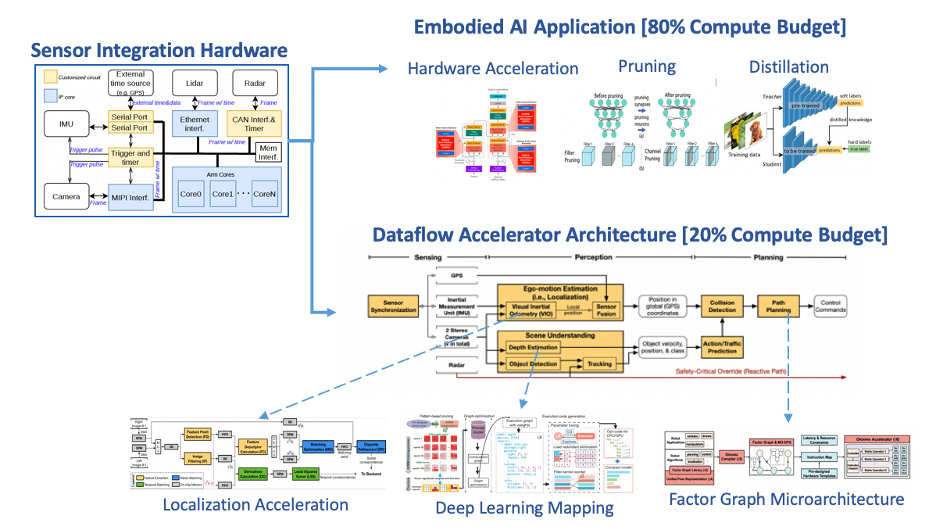

Figure 1: Computer Architecture for Embodied AI. Credit: Shaoshan Liu

Sensor Integration and Synchronization: Effective sensor integration and synchronization are critical, especially for robots with multiple multimodal sensors, because the quality of the sensor integration determines the quality of the sensor data. A hardware module must integrate more than ten sensors and provide a common timing source for accurate data acquisition and integration. An example of a sensor integration module can be found in [6].
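
Once every sensor is stamped against a common timing source, downstream software can group samples that belong to the same instant. The following is a minimal sketch of that matching step, not the hardware design in [6]. It assumes integer microsecond timestamps that are already sorted per sensor, and the names `nearest_index` and `align` are hypothetical.

```python
from bisect import bisect_left


def nearest_index(stamps: list[int], t: int) -> int:
    """Index of the timestamp closest to t in a sorted, non-empty list."""
    i = bisect_left(stamps, t)
    if i == 0:
        return 0
    if i == len(stamps):
        return len(stamps) - 1
    # Pick whichever neighbour is closer; ties go to the earlier sample.
    return i if stamps[i] - t < t - stamps[i - 1] else i - 1


def align(ref_name: str, ref_stamps: list[int],
          others: dict[str, list[int]], tolerance_us: int) -> list[dict[str, int]]:
    """Build one frame per reference timestamp.

    Each frame maps sensor name -> timestamp of the matched sample.
    A frame is dropped if any sensor has no sample within tolerance_us.
    """
    frames = []
    for t in ref_stamps:
        frame = {ref_name: t}
        for name, stamps in others.items():
            if not stamps:
                break  # sensor produced no data, so no frame can be complete
            s = stamps[nearest_index(stamps, t)]
            if abs(s - t) > tolerance_us:
                break  # closest sample is too far from the reference time
            frame[name] = s
        else:
            frames.append(frame)
    return frames


if __name__ == "__main__":
    camera = [0, 100_000, 200_000]
    imu = [1_000, 52_000, 99_000, 151_000, 230_000]
    lidar = [2_000, 101_000, 260_000]
    for frame in align("camera", camera, {"imu": imu, "lidar": lidar}, 5_000):
        print(frame)
```

In this example the third camera frame is discarded because the nearest IMU and lidar samples are tens of milliseconds away. The tighter the shared clock, the smaller the tolerance can be and the fewer frames are lost.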

Dataflow Accelerator Architecture: As discussed in our previous post, the computation of various robotic form factors follows the dataflow paradigm. Therefore, an effective way to improve the computational efficiency of embodied AI is to provide a Dataflow Accelerator Architecture [7] that accelerates the core robotic functions. Our goal is to develop an architecture that spends only 20% of the total system computational power on core robotic functions, leaving enough computational headroom for interesting embodied AI applications.
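
For readers unfamiliar with the dataflow paradigm, the key idea is that a computation fires as soon as all of its inputs are available, rather than following a fixed program order. The sketch below illustrates that firing rule in software only. It is not the accelerator architecture of [7], and the class `DataflowGraph` and the toy node functions are hypothetical.

```python
from collections import deque
from typing import Any, Callable, Iterable


class DataflowGraph:
    """Toy dataflow executor: a node fires once all of its inputs exist."""

    def __init__(self) -> None:
        self.nodes: dict[str, tuple[Callable[..., Any], tuple[str, ...]]] = {}

    def add(self, name: str, fn: Callable[..., Any], inputs: Iterable[str]) -> None:
        self.nodes[name] = (fn, tuple(inputs))

    def run(self, sources: dict[str, Any]) -> dict[str, Any]:
        values = dict(sources)
        pending = deque(self.nodes)
        stalled = 0  # consecutive nodes that could not fire
        while pending:
            name = pending.popleft()
            fn, inputs = self.nodes[name]
            if all(i in values for i in inputs):
                values[name] = fn(*(values[i] for i in inputs))
                stalled = 0
            else:
                pending.append(name)
                stalled += 1
                if stalled >= len(pending):
                    # Every remaining node was tried and none could fire.
                    raise ValueError("unsatisfiable inputs: " + ", ".join(pending))
        return values


if __name__ == "__main__":
    graph = DataflowGraph()
    # Nodes are registered out of order on purpose: data availability,
    # not registration order, decides when each one runs.
    graph.add("command", lambda goal, limit: min(goal, limit), ["goal_speed", "speed_limit"])
    graph.add("speed_limit", lambda d: min(1.0, 0.5 * d), ["obstacle_distance"])
    graph.add("obstacle_distance", min, ["lidar"])
    result = graph.run({"lidar": [2.0, 1.2, 3.5], "goal_speed": 1.0})
    print(result["command"])
```

Nodes whose inputs are independent of each other could fire in parallel, which is the property a hardware dataflow design would exploit. This sequential sketch shows only the dependency-driven ordering.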

AI Agent Hardware Accelerator: Each embodied AI application is an independent AI agent. The core of an AI agent is understanding and executing complex commands through vision-language models (VLMs) or vision-language-action models (VLAMs). The core challenge is to execute these billion-parameter models in real time on edge computing platforms. On the hardware side, accelerating self-attention is key to processing diverse, high-dimensional data efficiently. On the software side, techniques like pruning and quantization could compress model sizes by over 80% with minimal accuracy loss, while knowledge distillation may further reduce size by over 90%. With these optimizations combined, we aim to enable real-time processing of a 70B-parameter model on platforms equivalent to the NVIDIA Jetson AGX Xavier, paving the way for more responsive and intelligent robotic applications within the constraints of edge computing resources.
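
A quick back-of-the-envelope calculation shows what these compression ratios mean for memory. The sketch below rests on several assumptions that are not stated in the text: a 16-bit (2 bytes per parameter) baseline, reductions taken at exactly 80% and 90%, the distillation figure applied on top of the pruning and quantization step, and weights only, ignoring activations and other runtime memory. The function names are hypothetical.

```python
def weight_footprint_gb(num_params: float, bytes_per_param: float) -> float:
    """Memory needed for the weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


def apply_reduction(size_gb: float, reduction: float) -> float:
    """Size left after removing a fraction `reduction` (0.8 means 80% smaller)."""
    if not 0.0 <= reduction < 1.0:
        raise ValueError("reduction must be in [0, 1)")
    return size_gb * (1.0 - reduction)


# Assumption: FP16 baseline, i.e. 2 bytes per parameter.
baseline_gb = weight_footprint_gb(70e9, 2)
# "Over 80%" from pruning and quantization, taken here at exactly 80%.
compressed_gb = apply_reduction(baseline_gb, 0.80)
# Assumption: the 90% distillation figure applies on top of the step above.
distilled_gb = apply_reduction(compressed_gb, 0.90)

if __name__ == "__main__":
    for label, size in [
        ("FP16 baseline", baseline_gb),
        ("after pruning + quantization", compressed_gb),
        ("after distillation", distilled_gb),
    ]:
        print(f"{label}: {size:.1f} GB")
```

Under these assumptions the weights shrink from about 140 GB to about 28 GB and then to about 2.8 GB. Whether that fits a given edge platform depends on its memory capacity and on the runtime overhead this estimate leaves out.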

2.3. Design Automation for Embodied AI for Scalability

Designing, optimizing, and validating embodied AI systems require extensive data. Combining synthetic and real-world data addresses the scarcity of multi-scenario data. For instance, we can train a reinforcement learning controller in simulation with synthetic data, then use a small amount of real-world data to fine-tune the model, and finally apply transfer learning techniques to deploy the model in various real-world scenarios. We believe that developing an embodied AI design automation pipeline through digital twin simulation is an effective way to address the data bottleneck and achieve scalability [8].
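
The simulate-then-fine-tune workflow can be illustrated with a deliberately tiny example. Note that this sketch swaps the reinforcement learning controller for a one-parameter least-squares model trained by gradient descent, purely to keep the example short and deterministic. The gains `SIM_GAIN` and `REAL_GAIN`, the datasets, and the function names are all hypothetical.

```python
Sample = tuple[float, float]  # (input command, observed response)


def mse(w: float, data: list[Sample]) -> float:
    """Mean squared error of the model y = w * x on the data."""
    return sum((w * x - y) ** 2 for x, y in data) / len(data)


def fit(w: float, data: list[Sample], lr: float, epochs: int) -> float:
    """Plain gradient descent on the mean squared error, starting from w."""
    for _ in range(epochs):
        grad = sum(2 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


# Hypothetical actuator gains: the simulator is close to reality but not exact.
SIM_GAIN = 2.0
REAL_GAIN = 2.3

# Plentiful synthetic data from the simulator (101 samples).
synthetic = [(k / 10, SIM_GAIN * k / 10) for k in range(-50, 51)]
# Scarce real-world data (3 samples).
real = [(x, REAL_GAIN * x) for x in (-1.0, 0.5, 1.5)]

# Step 1: train from scratch in simulation.
w_sim = fit(0.0, synthetic, lr=0.05, epochs=200)
# Step 2: fine-tune on the small real dataset, starting from the simulated model.
w_real = fit(w_sim, real, lr=0.05, epochs=50)

if __name__ == "__main__":
    print(f"simulation only: w={w_sim:.3f}, real-world MSE={mse(w_sim, real):.4f}")
    print(f"after fine-tune: w={w_real:.3f}, real-world MSE={mse(w_real, real):.6f}")
```

The simulation-trained model starts close to the real behavior, so a handful of real samples is enough to close the remaining gap. That is the intuition behind combining abundant synthetic data with scarce real-world data.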

Conclusion

Developing computing systems for embodied AI represents the forefront of autonomous machine computing research. By addressing software integration challenges, optimizing computing architectures, and leveraging design automation, embodied AI robots are set to offer more sophisticated and efficient services in an array of complex environments. This multi-disciplinary endeavor opens new possibilities for rapid innovation in robotic technologies, paving the way for a future where robots seamlessly integrate into our physical world.

References

[1] Savva, M., Kadian, A., Maksymets, O., et al., 2019. Habitat: A platform for embodied AI research. In Proceedings of the IEEE/CVF International Conference on Computer Vision (pp. 9339-9347).

[2] Hello Robot Stretch Body, https://github.com/hello-robot/stretch_body/, accessed 2024/3/14.

[3] Meta Home Robot, https://github.com/facebookresearch/home-robot, accessed 2024/3/14.

[4] Brohan, A., Brown, N., Carbajal, J., et al., 2023. RT-2: Vision-language-action models transfer web knowledge to robotic control. arXiv preprint arXiv:2307.15818.

[5] OpenAI CLIP, https://openai.com/research/clip, accessed 2024/3/14.

[6] Liu, S., Yu, B., Liu, Y., et al., 2021, May. Brief industry paper: The matter of time—A general and efficient system for precise sensor synchronization in robotic computing. In 2021 IEEE 27th Real-Time and Embedded Technology and Applications Symposium (RTAS) (pp. 413-416). IEEE.

[7] Liu, S., Zhu, Y., Yu, B., Gaudiot, J.L. and Gao, G.R., 2021. Dataflow accelerator architecture for autonomous machine computing. arXiv preprint arXiv:2109.07047.

[8] Yu, B., Tang, J. and Liu, S.S., 2023, July. Autonomous Driving Digital Twin Empowered Design Automation: An Industry Perspective. In 2023 60th ACM/IEEE Design Automation Conference (DAC) (pp. 1-4). IEEE.

Shaoshan Liu’s background is a unique combination of technology, entrepreneurship, and public policy. He is currently a member of the ACM U.S. Technology Policy Committee and a member of the U.S. National Academy of Public Administration’s Technology Leadership Panel Advisory Group. Ding Ning is the Managing Dean of Shenzhen Institute of Artificial Intelligence and Robotics for Society (AIRS). His research focuses on embodied AI systems, their applications, and technology policy for AI.